This article explains how to integrate AEM and Solr so that an AEM component can use Solr to perform searches, as shown in the following illustration.

Compared with Oak indexing, developing an external search platform with Solr offers the following benefits:

• Full control over the Solr document model

• Control over boosting specific fields in the Solr document

• Control over real-time indexing

• Useful when multiple heterogeneous systems contribute content to the index
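To make the field-boosting benefit concrete, here is a minimal Java sketch that assembles the value of Solr's `qf` parameter (used by the edismax query parser) in its `field^boost` form. The field names `title` and `description` and the boost values are assumptions chosen for illustration, not fields defined by this article.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

final class BoostedQueryFields {
    /** Joins field boosts into the space-separated "field^boost" form used by qf. */
    static String queryFields(Map<String, Double> boosts) {
        StringJoiner joiner = new StringJoiner(" ");
        for (Map.Entry<String, Double> entry : boosts.entrySet()) {
            joiner.add(entry.getKey() + "^" + entry.getValue());
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        // Hypothetical fields: matches in the title count three times as much.
        Map<String, Double> boosts = new LinkedHashMap<>();
        boosts.put("title", 3.0);
        boosts.put("description", 1.0);
        System.out.println("defType=edismax, qf=" + queryFields(boosts));
    }
}
```

The resulting string still has to be URL-encoded before it is sent to Solr as a request parameter.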

Create project from scratch

· Project creation using the Eclipse plugin.

· Project creation using the Maven archetype.

· Deployment in an AEM instance.

Create project using Eclipse:

Download Eclipse from the location below.

https://github.com/Adobe-Marketing-Cloud/aem-project-archetype

Properties to remember while creating AEM projects.

Create project using Maven archetype:

The archetype creates a minimal Adobe Experience Manager project as a starting point for your own projects. The properties that must be provided when using this archetype let you name all parts of the project as desired.

Maven command to generate the project:

mvn org.apache.maven.plugins:maven-archetype-plugin:2.4:generate -DarchetypeGroupId=com.adobe.granite.archetypes -DarchetypeArtifactId=aem-project-archetype -DarchetypeVersion=13 -DarchetypeCatalog=https://repo.adobe.com/nexus/content/groups/public/

Deployment in AEM instance

Provided Maven profiles

autoInstallBundle: Installs the core bundle with the maven-sling-plugin to the Felix console.

autoInstallPackage: Installs the ui.content and ui.apps content packages with the content-package-maven-plugin to the package manager of the default author instance on localhost, port 4502. Hostname and port can be changed with the aem.host and aem.port user-defined properties.

autoInstallPackagePublish: Installs the ui.content and ui.apps content packages with the content-package-maven-plugin to the package manager of the default publish instance on localhost, port 4503. Hostname and port can be changed with the aem.host and aem.port user-defined properties.

Go to the parent folder of the project source and execute one of the commands below at the command prompt:

1. >mvn clean install

This compiles the full project but does not deploy it to your instances. The files below must be uploaded manually to the package manager and installed.

/ui.apps/target/***.zip

/ui.content/target/***.zip

2. >mvn clean install -PautoInstallPackage -Padobe-public

Note: Check the pom.xml file for the author port.

3. >mvn clean install -PautoInstallBundle

Installs the bundle only.

4. >mvn clean install -PautoInstallPackagePublish -Padobe-public

Note: Check the pom.xml file for the publish port.

The archetype provides the following modules:

core - Core bundle (Java code goes here)

it.launcher - Baseline bundle to support integration tests with AEM

it.test - Integration tests

ui.apps - Module for your components, templates, and related code

ui.content - Sample or test content for the project, or possibly actual content (keeping actual content in the codebase is not good practice)

Notes:

In short, Archetype is a Maven project templating toolkit. An archetype is defined as an original pattern or model from which all other things of the same kind are made.

The POM is the fundamental unit of Maven. It resides in the root directory of your project and contains information about the project and the configuration details Maven uses to build it. So before creating a project, first decide the project group (groupId), artifactId, and version, as these attributes uniquely identify the project in a Maven repository.

Create Solr_search component.

Create the Java files and note the purpose of each one in implementing Solr facet search in AEM.

Go to the core module.

SolrSearchService (a Java interface)

SOLRSEARCHSERVICE INTERFACE

The SolrSearchService interface describes the operations exposed by this service. The following Java code represents this interface.
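The interface listing itself does not appear in this text. Purely as an illustration of what a service contract of this kind could look like, here is a hypothetical sketch. The name `SolrSearchServiceSketch`, both method signatures, and the in-memory stand-in are assumptions, not the article's actual code. A real implementation would talk to the Solr server instead of a local list.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical service contract; not the article's actual SolrSearchService. */
interface SolrSearchServiceSketch {
    /** Adds one page, described as field name to field value, to the index. */
    boolean indexPage(Map<String, String> pageFields);

    /** Returns every indexed page that has a field containing the term. */
    List<Map<String, String>> search(String term);
}

/** In-memory stand-in so the sketch runs without a Solr server. */
final class InMemorySearchService implements SolrSearchServiceSketch {
    private final List<Map<String, String>> documents = new ArrayList<>();

    @Override
    public boolean indexPage(Map<String, String> pageFields) {
        // Copy defensively so later changes by the caller do not alter the index.
        return documents.add(new HashMap<>(pageFields));
    }

    @Override
    public List<Map<String, String>> search(String term) {
        List<Map<String, String>> hits = new ArrayList<>();
        for (Map<String, String> document : documents) {
            for (String value : document.values()) {
                if (value.contains(term)) {
                    hits.add(document);
                    break; // one matching field is enough for this document
                }
            }
        }
        return hits;
    }
}
```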

Creating a workflow for indexing.

Build the OSGi bundle using Maven

To build the OSGi bundle by using Maven, perform these steps:

- Open the command prompt and go to aem-solr-article.

- Run the following Maven command: mvn clean install.

- The OSGi bundle can be found in the following folder: aem-solr-article\core\target. The file name of the OSGi bundle is solr.core-1.0-SNAPSHOT.jar.

- Install the OSGi bundle using the Felix console.

Set up the Solr Server

Download and install the Solr server (solr-6.2.0.zip) from the following URL:

Then create a new core.

Configure AEM to use Solr Server

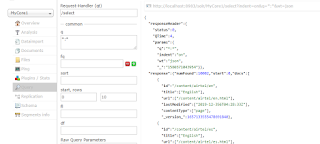

Configure AEM to use Solr server. Go to the following URL:

Search for AEM Solr Search - Solr Configuration Service and enter the following values:

- Protocol - http

- Solr Server name - localhost

- Solr Server Port - 8983

- Solr Core Name - collection (references the collection you created)
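As a rough illustration of how these four values fit together, the sketch below combines them into the base URL of a Solr core, following Solr's usual `/solr/<core>` path layout. The class `SolrEndpoint` is a hypothetical helper written for this example and is not part of the AEM Solr Search configuration service.

```java
import java.net.URI;

/** Hypothetical holder for the four configuration values listed above. */
final class SolrEndpoint {
    private final String protocol;
    private final String serverName;
    private final int port;
    private final String coreName;

    SolrEndpoint(String protocol, String serverName, int port, String coreName) {
        this.protocol = protocol;
        this.serverName = serverName;
        this.port = port;
        this.coreName = coreName;
    }

    /** Base URL of the core, in the form protocol://server:port/solr/core. */
    URI coreUrl() {
        return URI.create(protocol + "://" + serverName + ":" + port + "/solr/" + coreName);
    }

    public static void main(String[] args) {
        SolrEndpoint endpoint = new SolrEndpoint("http", "localhost", 8983, "collection");
        System.out.println(endpoint.coreUrl()); // prints http://localhost:8983/solr/collection
    }
}
```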

In the Solr admin UI, select the core from the drop-down control and select Query. Then click the Execute button. If successful, you will see the result set.
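The same check can be expressed as a request URL against the core's `/select` handler. The sketch below only builds that URL and assumes the core URL from the configuration values above. `*:*` is Solr's match-all query, and `rows` and `wt` are standard Solr request parameters. The helper class `SolrSelectUrl` is hypothetical.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

final class SolrSelectUrl {
    /** Builds a /select URL for the given core URL, query string, and row limit. */
    static URI build(String coreUrl, String query, int rows) {
        // Encode the query so characters such as ':' are safe inside the URL.
        String encodedQuery = URLEncoder.encode(query, StandardCharsets.UTF_8);
        return URI.create(coreUrl + "/select?q=" + encodedQuery + "&rows=" + rows + "&wt=json");
    }

    public static void main(String[] args) {
        // Assumes the local server and core name used in the configuration step.
        URI url = build("http://localhost:8983/solr/collection", "*:*", 10);
        System.out.println(url);
    }
}
```

Opening the printed URL in a browser, or fetching it with any HTTP client, should return the matching documents as JSON if the core is running and has indexed content.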

View Solr results in an AEM component

In CRXDE Lite, open the following HTML file:

/apps/solr/components/content/solrsearch/solrsearch.html

and add the following script at the top of the page:

<script src="https://code.jquery.com/jquery-3.1.0.js" integrity="sha256-slogkvB1K3VOkzAI8QITxV3VzpOnkeNVsKvtkYLMjfk=" crossorigin="anonymous"></script>

To access the component that displays Solr values, enter the following URL: